Problem

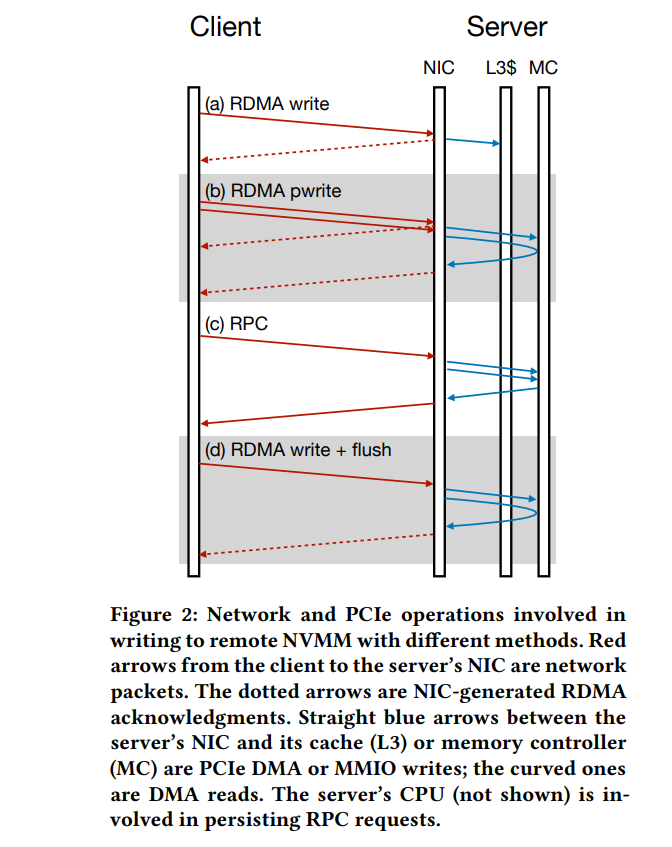

Due to RDMA NIC implementation, RNIC doesn't have remote persistent flush primitives. So one-sided write data from clients will write to the volatile cache on RNIC first and then RNIC directly sends ACK back before writing data to PM. As a result, a power loss will break remote data persistence easily.

Besides, one-sided commit[3] is immature or suffers poor performance.

Some researchers place this problem on the network systems level instead of the storage system level, and so ignore it. But for now, this problem does affect system availability.

Old methods

2-side RPC communication can avoid this problem, but 2-side ops can't fully deposit RNIC's performance and lack of scalability at the same time[1].

For 1-side ops, a strawman implementation is sending a write request followed by a read request. But the cost of 2 RTT is still too high.

New methods

[1] uses READ after WRITE, but with outstanding request[2] + doorbell batching[8] to process persistent WRITE request, which reduces latency from 4μs (2RTT) to 3μs.

- outstanding request: WR which was posted to a work queue and its completion was not polled (like unfinished requests?

- doorbell batching (just batching on RDMA

quote "Specifically, outstanding request [23] allows us using the completion of READ as the completion of the WRITE, as long as the two requests are sent to the same QP. Since the READ to persist the WRITE must be post to the same QP as the WRITE (§2.3), we no longer need to wait for the first WRITE to complete. Thus, this optimization reduces the wait time of the first network roundtrip. Applying outstanding request to persistent WRITE is correct because first, later READ flushes previously WRITE [19], and RNIC processes requests from the same QP in a FIFO order [6].

Based on outstanding request, doorbell batching [24] further allows us to send the READ and WRITE in one request using the more CPU and bandwidth efficient DMA, reducing the latency of posting RDMA requests.

On our testbed, a single one-sided RDMA request takes 2µs. Thus, a strawman implementation of remote persistent write uses 4µs. After applying H9, one-sided remote persistent write takes 3µs latency to finish"

[4] claims that for small persistent writes to remote NVMM, RPCs have comparable latency as one-sided RDMA.

note: the tricky part is that the first author of [4] is also the first author of outstanding request[2]… Maybe the reason is the different devices (CX3 and CX4+CX5)?

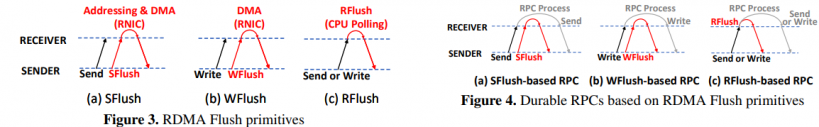

[5][10][11][12] use the RDMA WRITE_WITH_IMM verb to achieve remote persistence. So that servers will get the completion status and make data durable immediately.

note: WRITE_WITH_IMM can ensure atomicity since the data need to be confirmed by the extra involved server. On the other side, this imm is only 32-bit, which can't directly address the complete space.

like tranditional DB systems, there are some optimistic methods, like using redundancy check. [6] do CRC when reading to check data consistency.

To go a step further, [7] argues that CRC is expensive so they use a background thread to conduct integrity verification. (but this work is based on simulation…

check their brief intro in [9].

[9] built and test some emulated hardware-support RDMA primitives to support RMDA remote flush primitives(RPC).

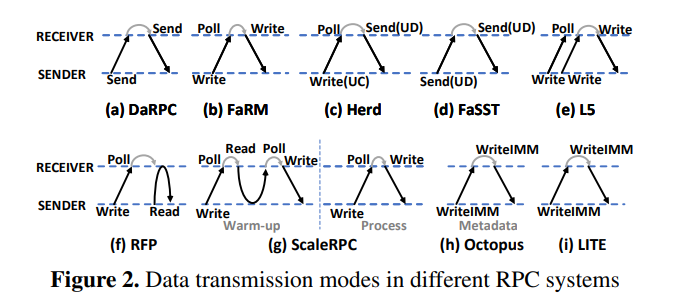

Popular RMDA RPC communication methods:

Theirs:

their work still relies on the existing RDMA primitives and the receiver's CPU to emulate RDMA RFlush primitives instead of programmable NIC.

?

Mellonx, gkd

refer

- Wei, Xingda, et al. "Characterizing and Optimizing Remote Persistent Memory with RDMA and NVM." Proceedings of the 2021 {USENIX} Annual Technical Conference ({USENIX}{ATC} 21). 2021.

- Kalia, Anuj, Michael Kaminsky, and David G. Andersen. "Using RDMA efficiently for key-value services." Proceedings of the 2014 ACM Conference on SIGCOMM. 2014.

- Kim, Daehyeok, et al. "Hyperloop: group-based NIC-offloading to accelerate replicated transactions in multi-tenant storage systems." Proceedings of the 2018 Conference of the ACM Special Interest Group on Data Communication. 2018.

- Kalia, Anuj, David Andersen, and Michael Kaminsky. "Challenges and solutions for fast remote persistent memory access." Proceedings of the 11th ACM Symposium on Cloud Computing. 2020.

- Lu, Youyou, et al. "Octopus: an rdma-enabled distributed persistent memory file system." 2017 {USENIX} Annual Technical Conference ({USENIX}{ATC} 17). 2017.

- Huang, Haixin, et al. "Forca: fast and atomic remote direct access to persistent memory." 2018 IEEE 36th International Conference on Computer Design (ICCD). IEEE, 2018.

- Du, Jingwen, et al. "Fast and Consistent Remote Direct Access to Non-volatile Memory." 50th International Conference on Parallel Processing. 2021.

- Kalia, Anuj, Michael Kaminsky, and David G. Andersen. "Design guidelines for high performance {RDMA} systems." 2016 {USENIX} Annual Technical Conference ({USENIX}{ATC} 16). 2016.

- Duan, Zhuohui, et al. "Hardware-Supported Remote Persistence for Distributed Persistent Memory." SC 2021.

- Shu, Jiwu, et al. "Th-dpms: Design and implementation of an rdma-enabled distributed persistent memory storage system." ACM Transactions on Storage (TOS) 16.4 (2020): 1-31.

- Liu, Xinxin, Yu Hua, and Rong Bai. "Consistent RDMA-Friendly Hashing on Remote Persistent Memory." ICCD 21.

- Yang, Jian, Joseph Izraelevitz, and Steven Swanson. "Orion: A distributed file system for non-volatile main memory and RDMA-capable networks." 17th {USENIX} Conference on File and Storage Technologies ({FAST} 19). 2019.