KD-Tree

KD-Tree is a classic method to index high-dimetional data (below 30, or the number of data is bigger than $2^k$).

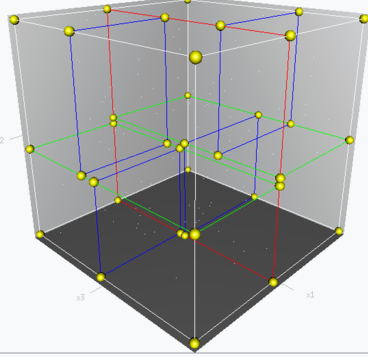

Structure

A simple search tree example is BST (Binary Search Tree), but only suitable for the 1-dim situation. This data structure splits data by comparing value. But we can't directly compare values between two high dimensional nodes.

So the problem is how to choose a single proper dimention to compare, split nodes and generate subtree. One classic algorithm is choose the median of the most spread dimension pivoting strategy. Calculate all variations in every dimensions, and choose the dimension with the biggest variation. The median value would be the pivot and help this binary tree become more balanced and fasten query.

So bascially, all data are stored in leaf nodes, and non-leaf nodes only provide a hyperplane to split data.

Query

After knowing the structure of KD-Tree, how to use it to find the nearest neighbor? When it comes to BST, we just go trough top-down approach and we would get the exact node.

In KD-Tree, at frist we run a normal BST-like top-down approach to find a "Best-For-Now (current best)" Node.

Then start a recurrence process:

- iterate all nodes in the old top-down path.

- compare the distance between (query_node, hyperplane_now), (query_node, Best-For-Now_node).

2.1 if the query node is closer to hyperplane, then start a new top-down approach in another subtrree, update "Best-For-Now" Node, and go back to 1.

Optimize

BBF (Best Bin First)

BBF makes this Nearest Neighbor algorithm become approximate. Original methods don't consider the relations among different hyperplanes. It just pulls everyone from stacks.

BBF sort all hyperplanes (also called "Bin") by the distance between bins and the query data.

Also, BBF set up a time limit to avoid unlimited searching.

Usage

KD-Tree is the most common method to implement KNN (k Nearest Neighbors).